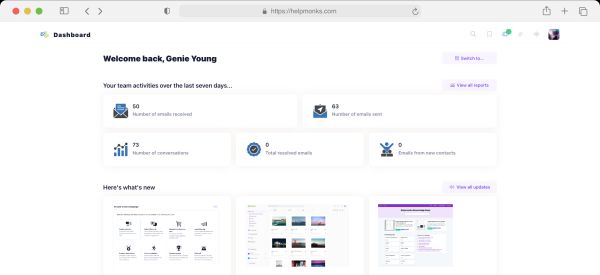

Introducing new pricing for Helpmonks

Discover Helpmonks' new pricing structure, featuring a flexible PRO plan, a FREE plan for smaller teams, and an exciting affiliate program. Experience unparalleled email management solutions today!

The other day I finally set out to start syncing one of my backup folders on one of our Ubuntu servers with Amazon S3. The reasons are obvious: Amazon S3 is cheap and reliable, and it is a good fit for keeping “smaller” chunks of files as backup storage. Writing the script that runs through crontab was easy; getting the S3 script itself running took far more time. So, here are the steps to sync any folder with Amazon S3.

First off, I created an additional bucket on S3. Let’s call this bucket “mybackup”.

Since I did not want to install anything extra to run the S3 scripts (there is a very popular S3Rsync for Ruby out there), I wanted to get it running with the “bash” shell itself. Why install another language if you already have everything you need, right?

So, I went ahead and downloaded the S3-bash scripts. As the name implies, these are scripts that work in the bash shell, and nothing else is needed to run them. Unfortunately, the documentation is badly lacking, so I had to search all over the net to find some. Since what I found was very sparse, here are the steps to get it running.

After you have unpacked the archive, you get three scripts. Since we want to put files on S3, we are going to focus on the “s3-put” script. Here is an example of what a call to the s3-put script looks like:

s3-put -k {yourkey} -s awssecretfile -T /backup/myfile.zip /mybackup/myfile.zip

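The explanation of the params is as follows:

- -k is your AWS access key ID (the {yourkey} placeholder above).
- -s is the path to a file that contains your AWS secret key.
- -T is the local file to upload.
- The last argument is the destination on S3, written as /bucket/filename.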
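Building on the single s3-put call above, a whole folder could be uploaded with a small loop. The following is only a sketch under stated assumptions: the function names, the S3_PUT override variable, and the flat /bucket/filename layout are made up for illustration and are not part of S3-bash. It also re-uploads every file on each run, so it is a plain copy rather than a true sync.

```shell
#!/usr/bin/env bash
# Sketch: upload every regular file in a folder with s3-put.
# Assumptions (not from S3-bash itself): the helper names, the S3_PUT
# override, and the flat /bucket/filename layout on S3.

S3_PUT="${S3_PUT:-s3-put}"   # command to run; override it for a dry run

# Build the S3 destination "/bucket/filename" for a local file.
s3_target_path() {
    local bucket="$1" file="$2"
    printf '/%s/%s\n' "$bucket" "$(basename "$file")"
}

# Upload each regular file in folder $1 to bucket $2,
# using access key ID $3 and secret key file $4.
upload_folder() {
    local dir="$1" bucket="$2" key="$3" secret_file="$4" file
    for file in "$dir"/*; do
        [ -f "$file" ] || continue   # skip subfolders and an unmatched glob
        # S3_PUT is left unquoted on purpose so "echo s3-put" works as a dry run
        $S3_PUT -k "$key" -s "$secret_file" -T "$file" \
            "$(s3_target_path "$bucket" "$file")" || return 1
    done
}
```

Calling `S3_PUT="echo s3-put" upload_folder /backup mybackup {yourkey} awssecretfile` prints the commands instead of running them, which is a cheap way to check the paths before anything is sent to S3.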

Now, that wasn’t so hard, right? Well, there is one small thing that drove me crazy during my initial setup: the file with my Amazon S3 secret key kept throwing errors, among them that the length did not match. After some digging around I figured out that one has to write the file again in order to get rid of the 41 bytes error message. The secret key itself is 40 characters long, so a file of 41 bytes usually means a trailing newline was saved along with it. To strip it, issue the following command:

cat awssecretfile | tr -d '\n' > awssecretfile-new
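To confirm the cleanup worked, the file size can be checked before handing the file to s3-put. This is a minimal sketch, assuming the secret key is the usual 40 characters; check_secret_file is a hypothetical helper name and not part of S3-bash.

```shell
# Sketch: report whether a secret key file is exactly 40 bytes long.
# check_secret_file is a hypothetical helper, not an S3-bash command.
check_secret_file() {
    local size
    # wc -c counts bytes; tr strips the padding some wc versions add
    size=$(wc -c < "$1" | tr -d ' ')
    if [ "$size" -eq 40 ]; then
        echo "ok"
    else
        echo "unexpected size: $size bytes"
        return 1
    fi
}
```

Running `check_secret_file awssecretfile-new` should print ok once the trailing newline is gone.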

Then pass awssecretfile-new to the -s option instead of the original file. Right, so I hope this helps someone out there. Have fun.

Discover Helpmonks' new pricing structure, featuring a flexible PRO plan, a FREE plan for smaller teams, and an exciting affiliate program. Experience unparalleled email management solutions today!

Read now

Dynamic email signatures increase brand visibility, build brand identity, and boost conversions. Learn how to create and update dynamic email signatures.

Read now

Looking for an email marketing automation software? This guide shows what to look for. We'll also review the best tools for your online marketing needs.

Read now

Using customer engagement solutions helps you keep your existing customer base and grow. Here are the top 10 customer engagement solutions for your business.

Read now

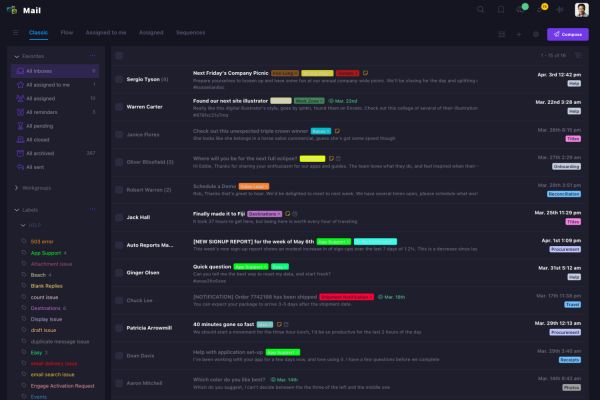

Empower your team and delight your customers.